System Failure 101: 7 Shocking Causes and How to Prevent Them

Ever felt the ground drop beneath you when your computer crashes, the power goes out, or a flight gets canceled? That’s the chilling reality of a system failure. It’s not just a glitch—it’s a breakdown that can ripple across industries, lives, and economies.

What Exactly Is a System Failure?

At its core, a system failure occurs when a system—be it mechanical, digital, biological, or organizational—ceases to perform its intended function. This can range from a minor hiccup to a catastrophic collapse. The term ‘system’ is broad, covering everything from your smartphone’s operating system to national power grids.

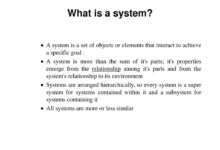

Defining System and Failure

A ‘system’ is any interconnected set of components working together to achieve a goal. When one or more components fail to operate correctly, the entire system may falter. The failure doesn’t always mean total shutdown; sometimes, it’s a degradation in performance that leads to inefficiency or risk.

- A system can be technical (like a server network), social (like a government agency), or natural (like an ecosystem).

- Failure is not always sudden—it can be gradual, masked by redundancy or temporary fixes.

- System failure often results from a chain of small errors rather than one single flaw.

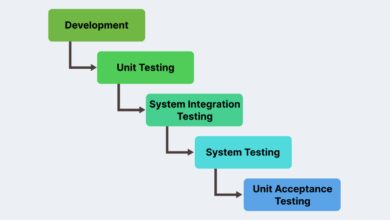

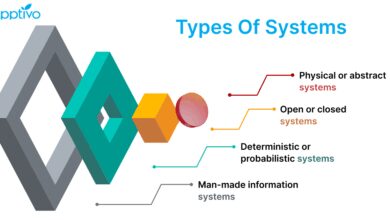

Types of System Failures

System failures are categorized based on their nature, scope, and impact. Understanding these types helps in diagnosing and preventing future issues.

- Hardware Failure: Physical components like hard drives, processors, or power supplies stop working. Example: A server’s fan fails, causing overheating and shutdown.

- Software Failure: Bugs, crashes, or design flaws in code. Example: A banking app freezes during a transaction.

- Human Error: Mistakes made by operators or users. Example: An engineer misconfigures a firewall, exposing the network to attacks.

- Environmental Failure: External factors like floods, fires, or electromagnetic interference disrupt operations.

“Failures are finger posts on the road to achievement.” – C.S. Lewis

Common Causes of System Failure

Behind every system failure lies a root cause—or often, a cascade of them. Identifying these is the first step toward building resilience.

Poor Design and Engineering Flaws

Many system failures stem from fundamental design weaknesses. When systems are built without sufficient testing, scalability, or redundancy, they’re vulnerable from the start.

- The NASA Mars Climate Orbiter failed in 1999 because one team used metric units while another used imperial—proof that even elite organizations aren’t immune.

- Designing without user behavior in mind can lead to misuse and failure. For example, overly complex interfaces increase the chance of human error.

Software Bugs and Glitches

In our digital world, software is the backbone of most systems. A single line of faulty code can bring down an entire network.

- The 2012 Knight Capital Group incident saw a software glitch wipe out $440 million in 45 minutes due to an untested algorithm deployment.

- Buffer overflows, memory leaks, and race conditions are common coding errors that can trigger system failure.

- Regular code audits and automated testing are essential to catch these before deployment.

Hardware Degradation and Obsolescence

Physical components wear out. Hard drives fail, batteries degrade, and circuits corrode. Ignoring maintenance schedules invites disaster.

- Data centers often use RAID arrays to mitigate disk failure, but even redundancy has limits.

- Legacy systems, like outdated hospital equipment or air traffic control software, are especially prone to failure due to lack of support and compatibility issues.

- Proactive replacement and monitoring tools (like SMART for hard drives) help predict and prevent hardware-related system failure.

System Failure in Critical Infrastructure

When critical systems fail, the consequences can be life-threatening. Power grids, transportation, healthcare, and communication networks are all vulnerable.

Power Grid Collapse

Electricity is the lifeblood of modern society. A failure in the power grid can paralyze cities and endanger lives.

- The 2003 Northeast Blackout affected 55 million people across the U.S. and Canada due to a software bug and poor monitoring.

- Overloaded transformers, cyberattacks, and extreme weather can all trigger cascading failures.

- Smart grids with real-time monitoring and self-healing capabilities are being developed to prevent future outages.

Transportation System Breakdowns

From air traffic control to railway signaling, transportation relies on complex, interdependent systems.

- In 2015, a software update caused Amtrak’s Northeast Corridor signaling system to fail, stranding thousands.

- Airport baggage handling systems, when down, can delay flights and cause chaos.

- Autonomous vehicles face unique risks—sensor failure or AI misjudgment could lead to fatal accidents.

Healthcare System Failures

Hospitals depend on integrated systems for patient records, diagnostics, and life support. A failure here can be fatal.

- In 2020, a ransomware attack on Universal Health Services disrupted operations across 400 facilities, forcing staff to revert to paper records.

- Misconfigured medical devices or software bugs in imaging systems can lead to misdiagnosis.

- Redundancy, encryption, and regular security updates are non-negotiable in healthcare IT.

The Role of Human Error in System Failure

Despite advanced technology, humans remain a critical—and often weakest—link in system reliability.

Miscommunication and Coordination Breakdown

Poor communication between teams can lead to catastrophic errors, especially in high-stakes environments.

- The 1986 Challenger disaster was partly due to engineers’ warnings about O-ring failure being ignored by NASA management.

- In hospitals, miscommunication during shift changes can result in medication errors or missed diagnoses.

- Standardized protocols and checklists (like those used in aviation) reduce the risk of miscommunication.

Training and Skill Gaps

Even the best systems fail when operators lack the knowledge to manage them.

- A 2019 report by IBM found that 95% of cybersecurity breaches involve human error, often due to inadequate training.

- Employees may bypass security protocols if they don’t understand the risks, such as clicking phishing links.

- Ongoing training, simulations, and certification programs are vital for maintaining system integrity.

Overconfidence and Complacency

When systems run smoothly for long periods, organizations may become complacent, neglecting maintenance and risk assessment.

- The 2010 Deepwater Horizon oil spill was preceded by ignored safety warnings and a culture of overconfidence in safety systems.

- Regular stress-testing and ‘red team’ exercises help uncover hidden vulnerabilities.

- Leadership must foster a culture of vigilance, where reporting near-misses is encouraged, not punished.

Cybersecurity Threats Leading to System Failure

In the digital age, cyberattacks are a leading cause of system failure. Malicious actors can exploit vulnerabilities to disrupt, steal, or destroy.

Ransomware and Data Encryption Attacks

Ransomware encrypts critical data, demanding payment for its release. The result is operational paralysis.

- The 2021 Colonial Pipeline attack forced a shutdown of fuel supply across the U.S. East Coast, causing panic buying and shortages.

- Backups, endpoint protection, and network segmentation are key defenses.

- CISA recommends zero-trust architecture to minimize attack surfaces.

DDoS Attacks and Service Disruption

Distributed Denial of Service (DDoS) attacks flood systems with traffic, making them inaccessible.

- In 2016, the Mirai botnet attacked Dyn, a DNS provider, taking down Twitter, Netflix, and Reddit.

- Cloud-based DDoS protection services can absorb and filter malicious traffic.

- Monitoring traffic patterns helps detect and mitigate attacks early.

Insider Threats and Data Breaches

Not all threats come from outside. Employees or contractors with access can intentionally or accidentally cause system failure.

- The 2013 Target breach was initiated by a third-party HVAC vendor’s compromised credentials.

- Role-based access control (RBAC) limits exposure by granting permissions based on job function.

- User behavior analytics (UBA) can detect anomalous activity before it leads to failure.

System Failure in Complex Adaptive Systems

Some systems, like financial markets, ecosystems, or social networks, are not just complex—they adapt and evolve. Their failures are harder to predict and control.

Financial Market Crashes

Markets are driven by human behavior, algorithms, and global events. A small trigger can cause a domino effect.

- The 2008 financial crisis was a system failure caused by risky lending, poor regulation, and interconnected financial instruments.

- High-frequency trading algorithms can amplify volatility, as seen in the 2010 Flash Crash.

- Regulatory oversight and stress-testing financial institutions help prevent systemic collapse.

Ecological System Collapse

Natural systems like coral reefs or rainforests can collapse due to overexploitation, pollution, or climate change.

- The Aral Sea, once the fourth-largest lake, shrank by 90% due to unsustainable irrigation—a human-induced system failure.

- Tipping points, once crossed, can make recovery impossible.

- Conservation efforts and sustainable practices are critical to preventing irreversible damage.

Social and Political System Breakdowns

Governments, institutions, and societies can fail when trust erodes, corruption spreads, or leadership falters.

- The collapse of the Soviet Union was a system failure rooted in economic stagnation and political rigidity.

- Modern democracies face challenges from misinformation, polarization, and institutional distrust.

- Transparency, accountability, and civic engagement are essential for system resilience.

How to Prevent and Mitigate System Failure

While not all failures can be avoided, robust strategies can reduce their frequency and impact.

Redundancy and Fail-Safe Mechanisms

Redundancy means having backup components that take over when the primary fails.

- Airplanes have multiple hydraulic systems; if one fails, others maintain control.

- Data centers use redundant power supplies and internet connections.

- Fail-safes, like circuit breakers or emergency shutdowns, prevent damage during failure.

Regular Maintenance and Monitoring

Preventive maintenance catches issues before they escalate.

- Industrial equipment uses predictive maintenance with sensors to monitor vibration, temperature, and wear.

- IT systems benefit from automated patching, log analysis, and intrusion detection.

- Scheduled audits ensure compliance and identify weak points.

Incident Response and Recovery Planning

When failure occurs, a clear response plan minimizes downtime and damage.

- Disaster recovery plans (DRPs) outline steps to restore operations after an outage.

- Business continuity planning (BCP) ensures critical functions continue during a crisis.

- Regular drills and tabletop exercises test readiness and improve coordination.

Case Studies of Major System Failures

History is filled with lessons from system failures. Studying them helps us build better systems.

Three Mile Island Nuclear Accident (1979)

A partial meltdown at the Pennsylvania plant was caused by a combination of mechanical failure and operator error.

- A stuck valve led to coolant loss, but misleading indicators confused the control room team.

- The incident highlighted the need for better training, clearer instrumentation, and safety culture.

- It led to sweeping changes in nuclear regulation and operator protocols.

Facebook Outage of 2021

For six hours, Facebook, Instagram, and WhatsApp went dark due to a BGP (Border Gateway Protocol) misconfiguration.

- A routine command inadvertently disconnected Facebook’s servers from the internet.

- The outage cost the company an estimated $60 million in ad revenue.

- It exposed over-reliance on internal systems and lack of external access during crises.

Boeing 737 MAX Crashes

The 2018 and 2019 crashes of Lion Air and Ethiopian Airlines flights were linked to the MCAS system, which relied on a single sensor.

- When the sensor failed, MCAS repeatedly pushed the nose down, overriding pilots.

- Poor communication, inadequate training, and rushed certification contributed to the disaster.

- Boeing redesigned MCAS and enhanced pilot training before the plane’s return to service.

What is the most common cause of system failure?

The most common cause of system failure is human error, often compounded by poor design, lack of training, or inadequate communication. According to IBM, 95% of cybersecurity breaches involve human mistakes, and many industrial accidents stem from operator error or misjudgment.

Can system failure be completely prevented?

While it’s impossible to eliminate all risks, system failure can be significantly reduced through redundancy, regular maintenance, robust design, and proactive monitoring. The goal is not perfection but resilience—ensuring systems can recover quickly when failures occur.

How do you recover from a system failure?

Recovery involves identifying the root cause, restoring functionality (often from backups), and implementing corrective actions to prevent recurrence. An incident response plan, clear communication, and post-mortem analysis are critical for effective recovery.

What is the difference between system failure and system error?

A system error is a specific malfunction or warning within a system, often correctable without major disruption. System failure, however, refers to a complete or partial breakdown of the system’s ability to function, usually requiring intervention to restore operations.

How does redundancy help prevent system failure?

Redundancy provides backup components or pathways that take over when the primary system fails. For example, redundant servers ensure a website stays online during hardware failure, and dual flight control systems in aircraft maintain safety if one fails.

System failure is more than a technical glitch—it’s a warning sign of deeper vulnerabilities. Whether caused by design flaws, human error, or cyberattacks, the consequences can be severe. But by understanding the causes, learning from past mistakes, and implementing robust safeguards, we can build systems that are not only resilient but adaptable. The goal isn’t to avoid failure entirely—because failure is inevitable—but to ensure that when it happens, we’re prepared to respond, recover, and improve.

Further Reading: